FGNet Results

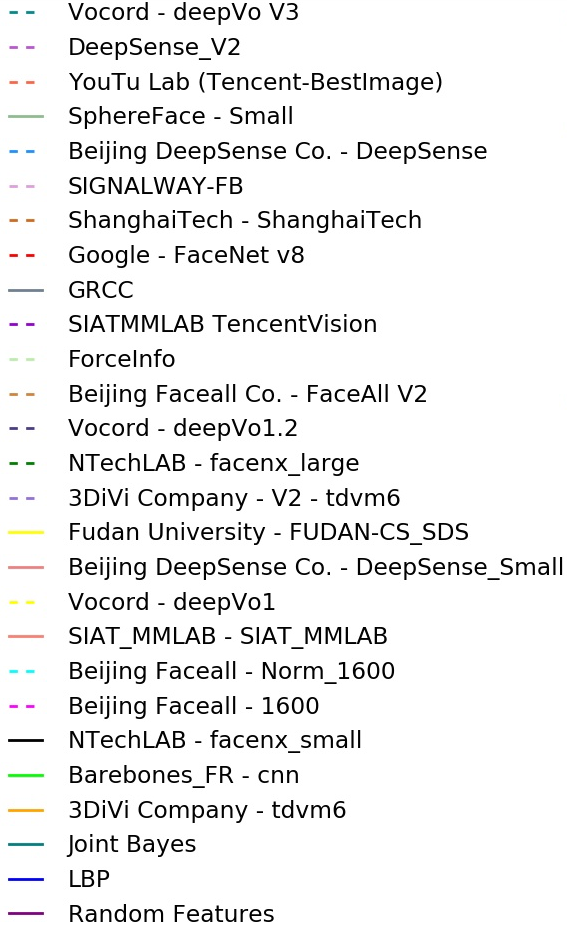

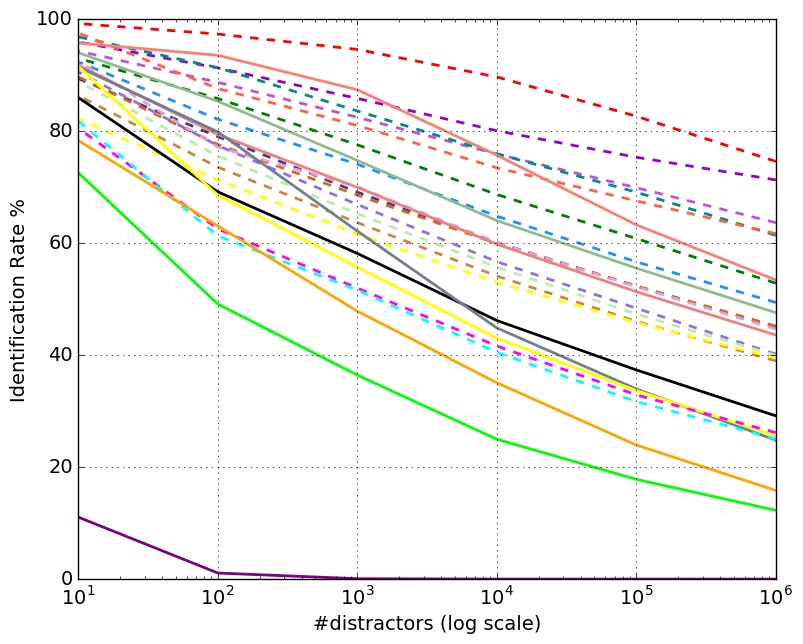

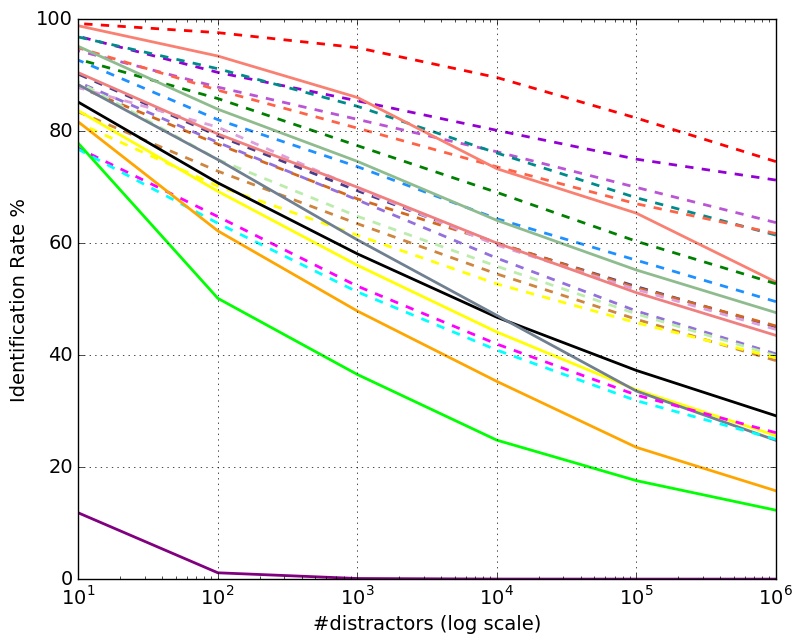

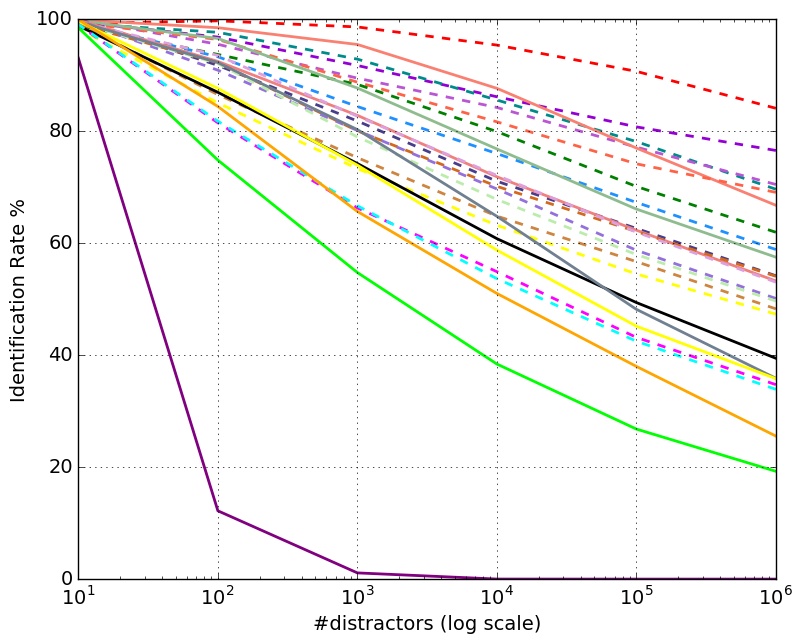

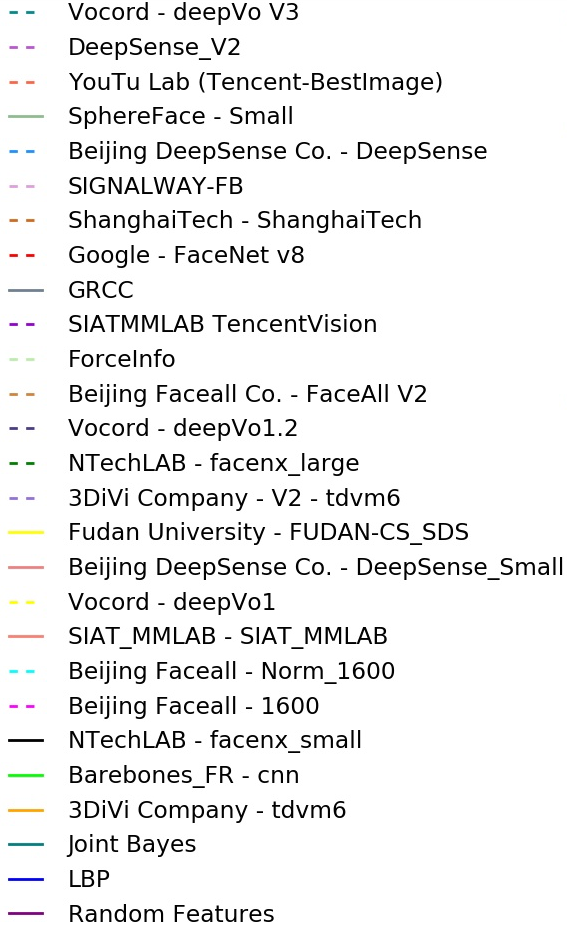

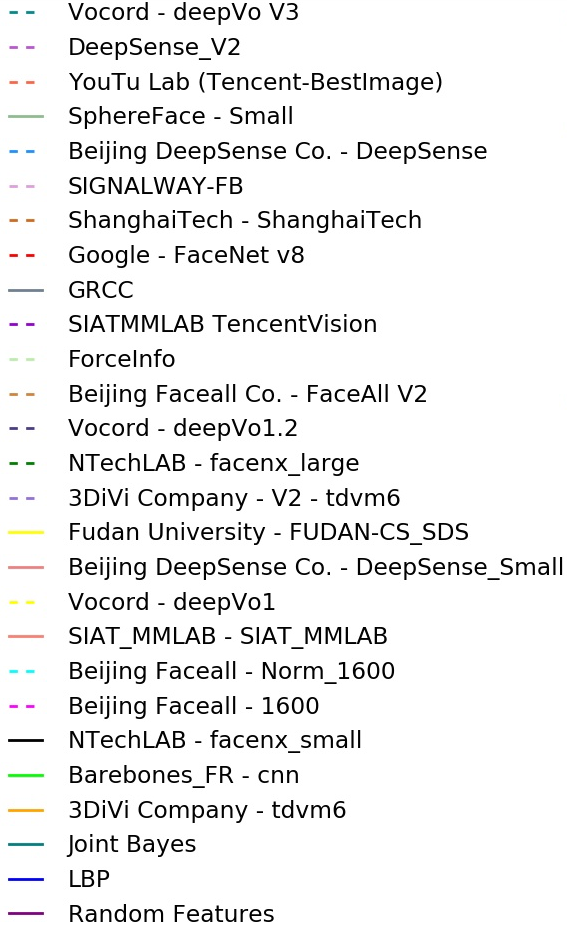

Identification Rate vs. Distractors Size

| Algorithm | Date Submitted | Set 1 | Set 2 | Set 3 | Data Set Size |

|---|---|---|---|---|---|

| THU CV-AI Lab | 12/12/2017 | 77.977% | 77.995% | 77.968% | Large |

| EI Networks | 8/10/2018 | 75.974% | 75.974% | 75.974% | Large |

| Google - FaceNet v8 | 10/23/2015 | 74.594% | 74.585% | 74.558% | Large |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | 7/23/2018 | 72.438% | 72.438% | 72.438% | Large |

| SIATMMLAB TencentVision | 12/1/2016 | 71.247% | 71.283% | 71.256% | Large |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | 8/15/2018 | 70.0% | 70.0% | 70.0% | Large |

| BingMMLab V1(iBUG cleaned data) | 4/10/2018 | 69.109% | 69.109% | 69.109% | Large |

| BingMMLab-v1 (non-cleaned data) | 4/10/2018 | 69.108% | 69.108% | 69.108% | Large |

| ATLAB-FACEX (QINIU CLOUD) | 6/23/2018 | 68.59% | 68.59% | 68.59% | Large |

| TencentAILab_FaceCNN_v1 | 9/21/2017 | 67.873% | 67.855% | 67.918% | Large |

| iBUG_DeepInsight | 2/8/2018 | 67.476% | 67.512% | 67.575% | Large |

| iBug (Reported by Author) | 04/28/2017 | 66.94% | | | Small |

| FaceTag V1 | 12/18/2017 | 66.086% | 66.185% | 66.149% | Large |

| Yang Sun | 06/05/2017 | 65.491% | 65.599% | 65.563% | Large |

| ULUFace | 5/7/2018 | 64.6878% | 64.6878% | 64.6878% | Large |

| DeepSense V2 | 1/22/2017 | 63.632% | 63.632% | 63.632% | Large |

| YouTu Lab (Tencent Best-Image) | 04/08/2017 | 61.611% | 61.693% | 61.6711% | Large |

| Vocord - deepVo V3 | 04/27/2017 | 61.395% | 61.386% | 61.431% | Large |

| SenseTime PureFace(clean) | 6/13/2018 | 59.003% | 59.003% | 59.003% | Large |

| CVTE V2 | 1/27/2018 | 57.163% | 57.191% | 57.182% | Small |

| Fiberhome AI Research Lab V2(Nanjing) | 8/28/2018 | 57.0462% | 57.0462% | 57.0462% | Large |

| SIAT_MMLAB | 3/29/2016 | 55.304% | 53.41% | 53.049% | Small |

| Intellivision | 2/11/2018 | 54.836% | 54.944% | 54.908% | Large |

| NTechLAB - facenx_large | 10/20/2015 | 52.716% | 52.733% | 52.743% | Large |

| Video++ | 1/5/2018 | 52.03% | 52.048% | 52.003% | Large |

| Faceter Lab | 12/18/2017 | 49.729% | 49.792% | 49.738% | Large |

| DeepSense - Large | 07/31/2016 | 49.368% | 49.396% | 49.549% | Large |

| Progressor | 09/13/2017 | 49.26% | 49.26% | 49.332% | Large |

| SphereFace - Small | 12/1/2016 | 47.555% | 47.582% | 47.591% | Small |

| TUPUTECH | 12/22/2017 | 47.248% | 47.384% | 47.194% | Large |

| Shanghai Tech | 08/13/2016 | 45.209% | 45.182% | 45.209% | Large |

| Vocord-deepVo1.2 | 12/1/2016 | 44.966% | 45.047% | 45.029% | Large |

| DeepSense - Small | 07/31/2016 | 43.54% | 43.576% | 43.522% | Small |

| CVTE | 08/30/2017 | 42.349% | 42.431% | 42.431% | Large |

| 3DiVi Company - tdvm V2 | 04/15/2017 | 40.256% | 40.166% | 40.22% | Large |

| ForceInfo | 04/07/2017 | 39.859% | 39.949% | 39.895% | Large |

| Vocord - DeepVo1 | 08/03/2016 | 39.489% | 39.471% | 39.534% | Large |

| Beijing Faceall Co. - FaceAll V2 | 04/28/2017 | 39.038% | 38.975% | 39.02% | Small |

| XT-tech V2 | 08/30/2017 | 36.855% | 36.945% | 36.918% | Large |

| NTechLAB - facenx_small | 10/20/2015 | 29.168% | 29.15% | 29.168% | Small |

| Beijing Faceall Co. - FaceAll_1600 | 10/19/2015 | 26.155% | 26.155% | 26.137% | Large |

| Fudan University - FUDAN-CS_SDS | 1/29/2017 | 25.568% | 25.559% | 25.623% | Small |

| Beijing Faceall Co. - FaceAll_Norm_1600 | 10/19/2015 | 25.02% | 25.045% | 24.973% | Large |

| GRCCV | 12/1/2016 | 24.783% | 24.802% | 24.783% | Small |

| 3DiVi Company - tdvm6 | 10/27/2015 | 15.78% | 15.825% | 15.77% | Small |

| Barebones_FR - cnn | 10/21/2015 | 7.136% | 10.709% | 10.736% | Small |
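As a rough illustration of how a rank-1 identification rate against a distractor gallery can be computed, the sketch below assumes the usual probe/mate/distractor setup: each probe is compared with one gallery image of the same person (its mate) plus every distractor, and counts as a hit when the mate scores highest. The function names, the cosine-similarity scoring, and the toy features are assumptions for illustration, not the benchmark's actual evaluation code.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank1_identification_rate(trials, distractors):
    """Fraction of probes whose mate outscores every distractor.

    trials:      list of (probe_feature, mate_feature) pairs (hypothetical layout).
    distractors: list of distractor features shared by all trials.
    """
    hits = 0
    for probe, mate in trials:
        mate_score = cosine_similarity(probe, mate)
        best_distractor = max(cosine_similarity(probe, d) for d in distractors)
        if mate_score > best_distractor:
            hits += 1
    return hits / len(trials)


# Toy data: the first probe finds its mate, the second is beaten by a distractor.
trials = [
    ([1.0, 0.0], [0.9, 0.1]),
    ([0.0, 1.0], [0.8, 0.6]),
]
distractors = [[1.0, 1.0], [-1.0, 0.0]]
rate = rank1_identification_rate(trials, distractors)
print(f"rank-1 identification rate: {rate:.1%}")
```

Adding more distractors can only lower or preserve the rate in this setup, which is why results are reported against the distractor set size.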

Method Details

| Algorithm | Details |

|---|---|

| Orion Star Technology (clean) | We have trained three deep networks (ResNet-101, ResNet-152, ResNet-200) with joint softmax and triplet loss on MS-Celeb-1M (95K identities, 5.1M images), and the triplet part is trained by batch online hard negative mining with subspace learning. The features of all networks are concatenated to produce the final feature, whose dimension is set to be 256x3. For data processing, we use original large images and follow our own system by detection and alignment. Particularly, in evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. |

| Orion Star Technology (no clean) | Compared to our other submission, “Orion Star Technology (clean)”, the major difference is that no data cleaning is applied in evaluation. |

| SuningUS_AILab | This is an ensemble of three different models using ResNet and improved ResNet networks, learned with a combined loss. Filtered MS-Celeb-1M and CASIA-WebFace are used as the dataset. |

| ULSee - Face Team | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 Large-Margin Softmax Loss for Convolutional Neural Networks https://arxiv.org/abs/1612.02295 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf NormFace: L2 Hypersphere Embedding for Face Verification https://arxiv.org/abs/1704.06369 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| SIATMMLAB TencentVision | We adopt an ensemble of very deep CNNs, learned by joint supervision (softmax loss, improved center loss, etc.). Training data is a combination of public datasets (CASIA, VGG, CACD2000, etc.) and private datasets. The total number of images is more than 2 million. |

| DeepSense - Small | We adopt a network of very deep ResNet CNNs, learned by combined supervision (identification loss (softmax loss), verification loss, triplet loss). |

| GRCCV | The algorithm consists of three parts: an FCN-based fast face detection algorithm, pre-training a ResNet CNN on a classification task, and weight tuning. The training set contains 273,000 photos. Hardware: 8 x Tesla K80. |

| SphereFace - Small | SphereFace uses a novel approach to learn face features that are discriminative on a hypersphere manifold. The training data set we use in SphereFace is the publicly available CASIA-WebFace dataset which contains 490k images of nearly 10,500 individuals. |

| EM-DATA | arcface, https://github.com/deepinsight/insightface |

| StartDT-AI | We use only a single model: a ResNet-28 network trained with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: DeepVisage: Making face recognition simple yet with powerful generalization skills https://arxiv.org/abs/1703.08388 One-shot Face Recognition by Promoting Underrepresented Classes https://arxiv.org/abs/1707.05574v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 |

| BingMMLab-v1 (non-cleaned data) | Compared to our submission named “BingMMLab V1(cleaned data)”, the only difference is that no data cleaning is applied in this evaluation. |

| BingMMLab V1(iBUG cleaned data) | We used a knowledge graph to collect identities and then crawled the Bing search engine to get high-quality images. We filtered noise in the 14M training data by clustering with weak face models, and then trained a DenseNet-69 (k=48) network with A-Softmax loss variants. In evaluation, we used the cleaned test set released by iBUG_DeepInsight. DenseNet https://arxiv.org/pdf/1608.06993v2.pdf A-Softmax and its variant: https://arxiv.org/pdf/1704.08063.pdf https://github.com/wy1iu/LargeMargin_Softmax_Loss/issues/13 |

| MTDP_ITC(Clean) | Angular Softmax Loss with Channel-wise Attention |

| Kankan AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| TUPUTECH V1 (iBUG cleaned data) | We have trained ResNet models with a combined loss on MS-Celeb-1M. In evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. [iBUG_DeepInsight code](https://github.com/deepinsight/insightface) |

| TUPUTECH v2 | Compared to our submission named “TUPUTECH v1 (clean)”, the only difference is that data cleaning by TUPU is applied in evaluation. |

| Uface | Trained a network with ArcFace loss and tried several different data preprocessing methods. In evaluation, used the cleaned test set released by iBUG_DeepInsight. |

| ULUFace | Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 MTCNN:Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 FaceNet: A Unified Embedding for Face Recognition and Clustering https://arxiv.org/abs/1503.03832 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| ULUFace | Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 MTCNN:Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 FaceNet: A Unified Embedding for Face Recognition and Clustering https://arxiv.org/abs/1503.03832 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| Jian24 Vision | We use only a single model: a ResNet-101 network trained with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf |

| sophon | Uses insightface with a modified loss. |

| MSU_Intsys | Custom version of ArcFace. |

| FeelingTech | FeelingFace, which was trained with the cleaned MS-Celeb-1M dataset. |

| KANKAN AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| Sogou | We collected and filtered millions of pictures from the Sogou picture search engine, and also used picture data cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into different patches so that we can get features representing local regions such as the mouth and even the teeth. We merge the features from the different models together as the final result. |

| SenseTime PureFace(clean) | We trained a deep residual attention network (attention-56) with A-Softmax to learn the face feature. The training dataset is constructed with a novel dataset-building technique, which is critical for improving the performance of the model. The results are reported on the cleaned test set released by iBUG_DeepInsight. Wang F, Jiang M, Qian C, et al. Residual attention network for image classification[J]. arXiv preprint arXiv:1704.06904, 2017. Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. arXiv preprint arXiv:1801.07698, 2018. Liu W, Wen Y, Yu Z, et al. Sphereface: Deep hypersphere embedding for face recognition[C]//The IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017, 1. |

| EM-DATA | arcface, https://github.com/deepinsight/insightface |

| ATLAB-FACEX (QINIU CLOUD) | A single deep Resnet model (the 'r100' configuration from insightface) trained with our own angular margin loss (an improved variant of A-Softmax, not published yet). The training set consists of nearly 6.2M images (about 96K identities). For MegaFace evaluation, we adopted the clean list released by iBUG_DeepInsight. insightface: https://github.com/deepinsight/insightface A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Qsdream | ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| PingAn AI Lab (Nanjing) | This is a single model, a deep ResNet network trained on MS-Celeb-1M with a cosine loss. References: ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 We used the noise list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab(Nanjing) | mtcnn: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 https://github.com/pangyupo/mxnet_mtcnn_face_detection ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | This feature model is an ensemble of 3 deep Resnet models (the 'r100' and 'r152' configuration from insightface[1] ) trained with our own angular margin loss (an improved variant of A-Softmax[2] , not published yet). The training set consists of 7 Million images (about 188K identities). For evaluation, we adopted the clean list released by iBUG_DeepInsight[1] . Reference: [1] insightface: https://github.com/deepinsight/insightface [2] A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Visual Computing-Alibaba-V1(clean) | A single model (improved ResNet-152) is trained under the supervision of combined loss functions (A-Softmax loss, center loss, triplet loss, etc.) on MS-Celeb-1M (84K identities, 5.2M images). In evaluation, we use the cleaned FaceScrub and MegaFace released by iBUG_DeepInsight. |

| Beijing Faceall Co. & BUPT(iBug cleaned) | We trained on the Faceall-msra celebrities dataset, which has over 4.4 million photos. We ensemble 6 models and use cosine distance as the distance metric. As loss functions, we adopt A-Softmax and Additive Angular Margin Loss during training. We evaluate our result on the iBUG-cleaned versions of the MegaFace and FaceScrub lists. Method references: SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| EI Networks | We built a training database of 120,000 identities and 12 million images from a combination of public and private databases. We removed the overlap with the FaceScrub and FGNet databases from our training set. Three deep residual networks are trained (one ResNet-150-like and two ResNet-100-like) on 112x96 input images with multiple large-margin loss functions. Each network is further fine-tuned using triplet loss. The output features of the three networks are concatenated and trained with metric learning for dimension reduction to a vector of size 512. |

| Uniview Technology | Uses a fusion model of ResNet and GoogLeNet. |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | An improved loss of sphereface with a large-scale training dataset. |

| 4paradigm | We trained a ResNet-101 model with large additive margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned the FaceScrub and MegaFace using the list of noisy face images released by [1]. [1] Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. 2018. |

| 4paradigm | We trained a ResNet-101 model with combined large-margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned the FaceScrub and MegaFace using the noise list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab V2(Nanjing) | Compared to our submission named “Fiberhome AI Research Lab”, the differences are the following: 1. We changed the face alignment method. 2. We added private datasets for training. 3. We adopted a ResNet-50 network trained with cosine loss and Additive Angular Margin Loss. |

| CyberLink | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| CyberLink_mobile | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html MobileFaceNets: Efficient CNNs for Accurate Real-Time Face Verification on Mobile Devices https://arxiv.org/abs/1804.07573v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| cyberlink_resnet-v2 | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Sogou AIGROUP - SFace | We collected and filtered millions of pictures from the Sogou picture search engine and mined one hundred thousand hard negative samples; all data are cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into different patches so that we can get features representing local regions, especially for the tooth similarity model. We merge the features from the different models together as the final result. |

| ICARE_FACE_V1 | We have trained our model based on a deep convolutional neural network (ResNet101) with Additive Margin Softmax. We have semi-automatically cleaned the training dataset MSCeleb-1M. In particular, in evaluation, we cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599 Insightface clean list https://github.com/deepinsight/insightface |
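Several entries above cite the Additive Angular Margin Loss (ArcFace). As a minimal sketch of the idea, the function below adds an angular margin m to the angle between a feature and its target class center before scaling, so the target logit becomes s·cos(θ + m) while the other logits stay at s·cos(θ). The function name, the default values m = 0.5 and s = 64, and the toy inputs are illustrative assumptions; this does not reproduce any submission's training code, and full implementations also handle the case θ + m > π, which this sketch ignores.

```python
import math


def additive_angular_margin_logits(cosines, target, margin=0.5, scale=64.0):
    """Return scaled logits with an angular margin added to the target class.

    cosines: cosine similarity between one normalized feature and each
             normalized class center.
    target:  index of the ground-truth class.
    """
    logits = []
    for index, cosine in enumerate(cosines):
        cosine = max(-1.0, min(1.0, cosine))  # keep acos in its valid domain
        if index == target:
            theta = math.acos(cosine)
            cosine = math.cos(theta + margin)  # shrink the target-class score
        logits.append(scale * cosine)
    return logits


# Toy example: class 0 is the ground truth, so only its logit is penalized.
logits = additive_angular_margin_logits([0.8, 0.1], target=0)
print(logits)
```

Because the target logit is lowered during training, the network has to pull features closer to their class center to reach the same softmax probability, which is the intended effect of the margin.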

- - uses large training set

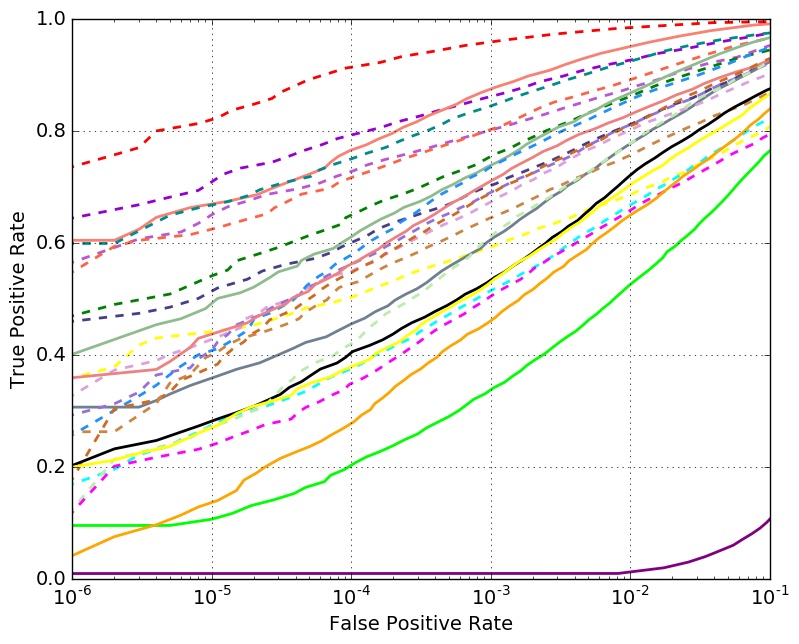

Plot panels: Set 1, Set 2, Set 3

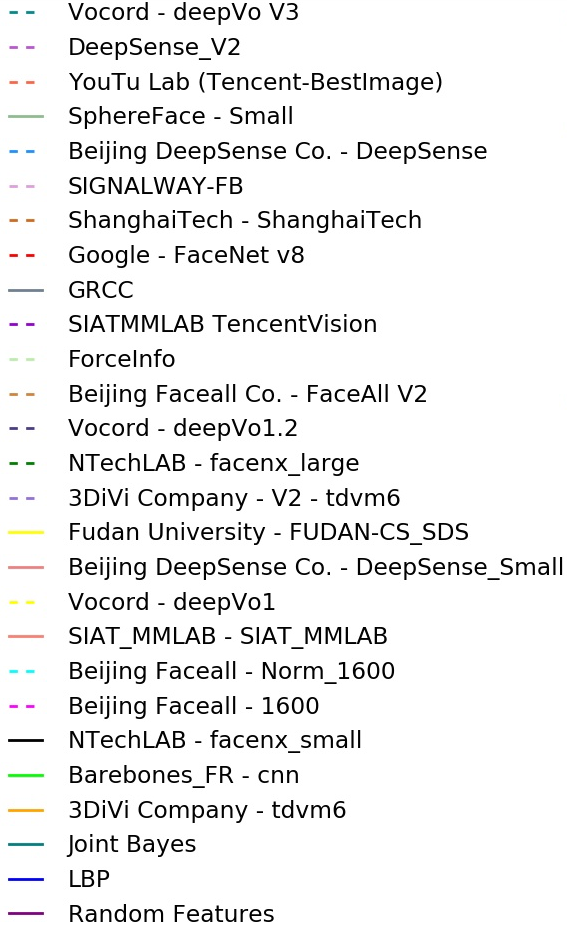

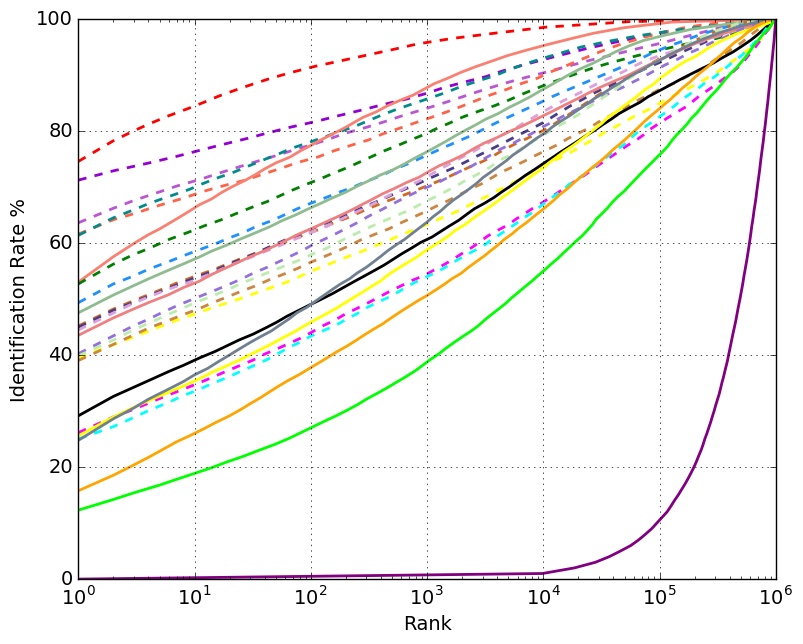

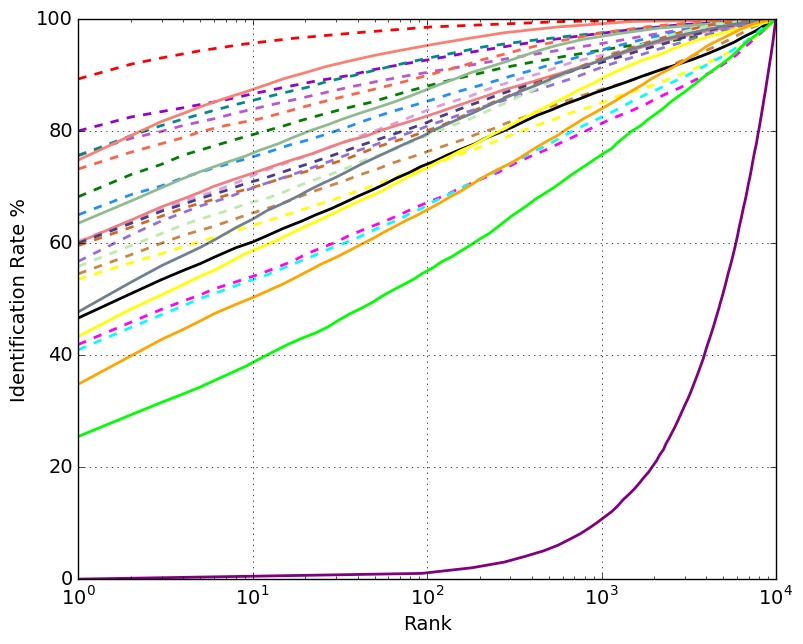

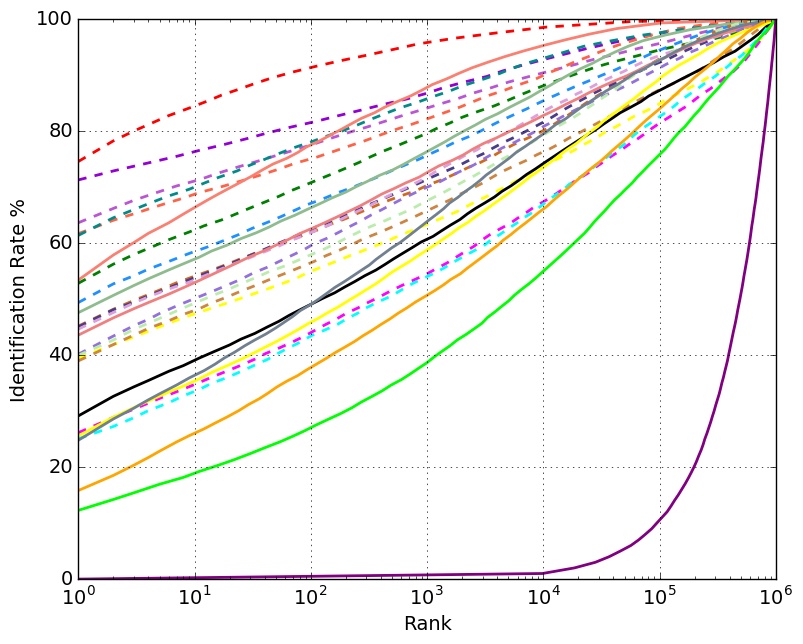

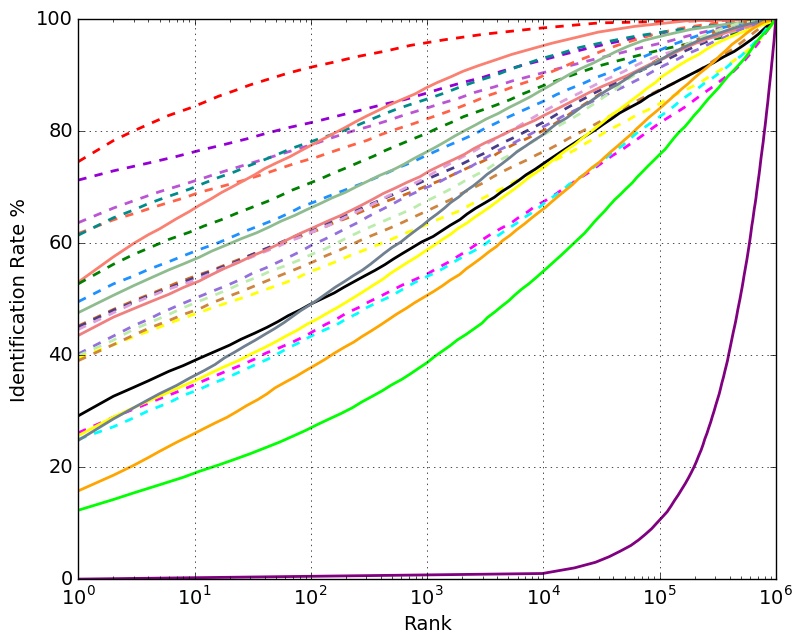

Identification Rate vs. Rank

| Algorithm | Date Submitted | Set 1 | Set 2 | Set 3 | Data Set Size |

|---|---|---|---|---|---|

| THU CV-AI Lab | 12/12/2017 | 77.977% | 77.995% | 77.968% | Large |

| EI Networks | 8/10/2018 | 75.974% | 75.974% | 75.974% | Large |

| Google - FaceNet v8 | 10/23/2015 | 74.594% | 74.585% | 74.558% | Large |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | 7/23/2018 | 72.438% | 72.438% | 72.438% | Large |

| SIATMMLAB TencentVision | 12/1/2016 | 71.247% | 71.283% | 71.256% | Large |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | 8/15/2018 | 70.0% | 70.0% | 70.0% | Large |

| BingMMLab V1(iBUG cleaned data) | 4/10/2018 | 69.109% | 69.109% | 69.109% | Large |

| BingMMLab-v1 (non-cleaned data) | 4/10/2018 | 69.108% | 69.108% | 69.108% | Large |

| ATLAB-FACEX (QINIU CLOUD) | 6/23/2018 | 68.59% | 68.59% | 68.59% | Large |

| TencentAILab_FaceCNN_v1 | 9/21/2017 | 67.873% | 67.855% | 67.918% | Large |

| iBUG_DeepInsight | 2/8/2018 | 67.476% | 67.512% | 67.575% | Large |

| iBug (Reported by Author) | 04/28/2017 | 66.94% | | | Small |

| FaceTag V1 | 12/18/2017 | 66.086% | 66.185% | 66.149% | Large |

| Yang Sun | 06/05/2017 | 65.491% | 65.599% | 65.563% | Large |

| ULUFace | 5/7/2018 | 64.6878% | 64.6878% | 64.6878% | Large |

| DeepSense V2 | 1/22/2017 | 63.632% | 63.632% | 63.632% | Large |

| YouTu Lab (Tencent Best-Image) | 04/08/2017 | 61.611% | 61.693% | 61.6711% | Large |

| Vocord - deepVo V3 | 04/27/2017 | 61.395% | 61.386% | 61.431% | Large |

| SenseTime PureFace(clean) | 6/13/2018 | 59.003% | 59.003% | 59.003% | Large |

| CVTE V2 | 1/27/2018 | 57.163% | 57.191% | 57.182% | Small |

| Fiberhome AI Research Lab V2(Nanjing) | 8/28/2018 | 57.0462% | 57.0462% | 57.0462% | Large |

| SIAT_MMLAB | 3/29/2016 | 55.304% | 53.41% | 53.049% | Small |

| Intellivision | 2/11/2018 | 54.836% | 54.944% | 54.908% | Large |

| NTechLAB - facenx_large | 10/20/2015 | 52.716% | 52.733% | 52.743% | Large |

| Video++ | 1/5/2018 | 52.03% | 52.048% | 52.003% | Large |

| Faceter Lab | 12/18/2017 | 49.729% | 49.792% | 49.738% | Large |

| DeepSense - Large | 07/31/2016 | 49.368% | 49.396% | 49.549% | Large |

| Progressor | 09/13/2017 | 49.26% | 49.26% | 49.332% | Large |

| SphereFace - Small | 12/1/2016 | 47.555% | 47.582% | 47.591% | Small |

| TUPUTECH | 12/22/2017 | 47.248% | 47.384% | 47.194% | Large |

| Shanghai Tech | 08/13/2016 | 45.209% | 45.182% | 45.209% | Large |

| Vocord-deepVo1.2 | 12/1/2016 | 44.966% | 45.047% | 45.029% | Large |

| DeepSense - Small | 07/31/2016 | 43.54% | 43.576% | 43.522% | Small |

| CVTE | 08/30/2017 | 42.349% | 42.431% | 42.431% | Large |

| 3DiVi Company - tdvm V2 | 04/15/2017 | 40.256% | 40.166% | 40.22% | Large |

| ForceInfo | 04/07/2017 | 39.859% | 39.949% | 39.895% | Large |

| Vocord - DeepVo1 | 08/03/2016 | 39.489% | 39.471% | 39.534% | Large |

| Beijing Faceall Co. - FaceAll V2 | 04/28/2017 | 39.038% | 38.975% | 39.02% | Small |

| XT-tech V2 | 08/30/2017 | 36.855% | 36.945% | 36.918% | Large |

| NTechLAB - facenx_small | 10/20/2015 | 29.168% | 29.15% | 29.168% | Small |

| Beijing Faceall Co. - FaceAll_1600 | 10/19/2015 | 26.155% | 26.155% | 26.137% | Large |

| Fudan University - FUDAN-CS_SDS | 1/29/2017 | 25.568% | 25.559% | 25.623% | Small |

| Beijing Faceall Co. - FaceAll_Norm_1600 | 10/19/2015 | 25.02% | 25.045% | 24.973% | Large |

| GRCCV | 12/1/2016 | 24.783% | 24.802% | 24.783% | Small |

| 3DiVi Company - tdvm6 | 10/27/2015 | 15.78% | 15.825% | 15.77% | Small |

| Barebones_FR - cnn | 10/21/2015 | 7.136% | 10.709% | 10.736% | Small |

Method Details

| Algorithm | Details |

|---|---|

| Orion Star Technology (clean) | We have trained three deep networks (ResNet-101, ResNet-152, ResNet-200) with joint softmax and triplet loss on MS-Celeb-1M (95K identities, 5.1M images), and the triplet part is trained by batch online hard negative mining with subspace learning. The features of all networks are concatenated to produce the final feature, whose dimension is set to be 256x3. For data processing, we use original large images and follow our own system by detection and alignment. Particularly, in evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. |

| Orion Star Technology (no clean) | Compared to our other submission, “Orion Star Technology (clean)”, the major difference is that no data cleaning is applied in evaluation. |

| SuningUS_AILab | This is an ensemble of three different models using ResNet and improved ResNet networks, learned with a combined loss. Filtered MS-Celeb-1M and CASIA-WebFace are used as the dataset. |

| ULSee - Face Team | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 Large-Margin Softmax Loss for Convolutional Neural Networks https://arxiv.org/abs/1612.02295 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf NormFace: L2 Hypersphere Embedding for Face Verification https://arxiv.org/abs/1704.06369 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| SIATMMLAB TencentVision | We adopt an ensemble of very deep CNNs, learned by joint supervision (softmax loss, improved center loss, etc.). Training data is a combination of public datasets (CASIA, VGG, CACD2000, etc.) and private datasets. The total number of images is more than 2 million. |

| DeepSense - Small | We adopt a network of very deep ResNet CNNs, learned by combined supervision (identification loss (softmax loss), verification loss, triplet loss). |

| GRCCV | The algorithm consists of three parts: an FCN-based fast face detection algorithm, pre-training a ResNet CNN on a classification task, and weight tuning. The training set contains 273,000 photos. Hardware: 8 x Tesla K80. |

| SphereFace - Small | SphereFace uses a novel approach to learn face features that are discriminative on a hypersphere manifold. The training data set we use in SphereFace is the publicly available CASIA-WebFace dataset which contains 490k images of nearly 10,500 individuals. |

| EM-DATA | arcface, https://github.com/deepinsight/insightface |

| StartDT-AI | We use only a single model: a ResNet-28 network trained with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: DeepVisage: Making face recognition simple yet with powerful generalization skills https://arxiv.org/abs/1703.08388 One-shot Face Recognition by Promoting Underrepresented Classes https://arxiv.org/abs/1707.05574v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 |

| BingMMLab-v1 (non-cleaned data) | Compared to our submission named “BingMMLab V1(cleaned data)”, the only difference is that no data cleaning is applied in this evaluation. |

| BingMMLab V1(iBUG cleaned data) | We used a knowledge graph to collect identities and then crawled the Bing search engine to get high-quality images. We filtered noise in the 14M training data by clustering with weak face models, and then trained a DenseNet-69 (k=48) network with A-Softmax loss variants. In evaluation, we used the cleaned test set released by iBUG_DeepInsight. DenseNet https://arxiv.org/pdf/1608.06993v2.pdf A-Softmax and its variant: https://arxiv.org/pdf/1704.08063.pdf https://github.com/wy1iu/LargeMargin_Softmax_Loss/issues/13 |

| MTDP_ITC(Clean) | Angular Softmax Loss with Channel-wise Attention |

| Kankan AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| TUPUTECH V1 (iBUG cleaned data) | We have trained ResNet models with a combined loss on MS-Celeb-1M. In evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. [iBUG_DeepInsight code](https://github.com/deepinsight/insightface) |

| TUPUTECH v2 | Compared to our submission named “TUPUTECH v1 (clean)”, the only difference is that data cleaning by TUPU is applied in evaluation. |

| Uface | Trained a network with ArcFace loss and tried several different data preprocessing methods. In evaluation, used the cleaned test set released by iBUG_DeepInsight. |

| ULUFace | Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 MTCNN:Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 FaceNet: A Unified Embedding for Face Recognition and Clustering https://arxiv.org/abs/1503.03832 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| ULUFace | Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 MTCNN:Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 FaceNet: A Unified Embedding for Face Recognition and Clustering https://arxiv.org/abs/1503.03832 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| Jian24 Vision | We use only a single model: a ResNet-101 network trained with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf |

| sophon | Uses insightface with a modified loss. |

| MSU_Intsys | Custom version of ArcFace. |

| FeelingTech | FeelingFace, trained on a cleaned MS-Celeb-1M dataset. |

| KANKAN AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| Sogou | We collected and filtered millions of pictures from the Sogou picture search engine, and also used picture data cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into patches to obtain features that represent local regions such as the mouth and even the teeth. We merged the features from the different models to produce the final result. |

| SenseTime PureFace(clean) | We trained a deep residual attention network (Attention-56) with A-Softmax to learn the face features. The training dataset was constructed with a novel dataset-building technique, which is critical to the model's performance. The results are reported on the cleaned test set released by iBUG_DeepInsight. Wang F, Jiang M, Qian C, et al. Residual attention network for image classification[J]. arXiv preprint arXiv:1704.06904, 2017. Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. arXiv preprint arXiv:1801.07698, 2018. Liu W, Wen Y, Yu Z, et al. Sphereface: Deep hypersphere embedding for face recognition[C]//The IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017, 1. |

| EM-DATA | arcface, https://github.com/deepinsight/insightface |

| ATLAB-FACEX (QINIU CLOUD) | A single deep Resnet model (the 'r100' configuration from insightface) trained with our own angular margin loss (an improved variant of A-Softmax, not published yet). The training set consists of nearly 6.2M images (about 96K identities). For MegaFace evaluation, we adopted the clean list released by iBUG_DeepInsight. insightface: https://github.com/deepinsight/insightface A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Qsdream | ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| PingAn AI Lab (Nanjing) | This is a single deep ResNet model trained on MS-Celeb-1M with a cosine loss. References: ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 We used the noise list provided by InsightFace at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab(Nanjing) | mtcnn: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 https://github.com/pangyupo/mxnet_mtcnn_face_detection ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | This feature model is an ensemble of 3 deep Resnet models (the 'r100' and 'r152' configuration from insightface[1] ) trained with our own angular margin loss (an improved variant of A-Softmax[2] , not published yet). The training set consists of 7 Million images (about 188K identities). For evaluation, we adopted the clean list released by iBUG_DeepInsight[1] . Reference: [1] insightface: https://github.com/deepinsight/insightface [2] A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Visual Computing-Alibaba-V1(clean) | A single model (improved ResNet-152) is trained under the supervision of combined loss functions (A-Softmax loss, center loss, triplet loss, etc.) on MS-Celeb-1M (84K identities, 5.2M images). In evaluation, we use the cleaned FaceScrub and MegaFace released by iBUG_DeepInsight. |

| Beijing Faceall Co. & BUPT(iBug cleaned) | We trained on the Faceall-msra celebrities dataset, which contains over 4.4 million photos. We use an ensemble of 6 models with cosine distance as the distance metric. For the loss function, we adopt A-Softmax and Additive Angular Margin Loss during training. We evaluate our results on the iBUG-cleaned versions of the MegaFace and FaceScrub lists. Method references: SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| EI Networks | We built a training database of 120,000 identities and 12 million images from a combination of public and private databases. We removed the overlap with the FaceScrub and FGNet databases from our training set. Three deep residual networks (one ResNet-150-like and two ResNet-100-like) are trained on 112x96 input images with multiple large-margin loss functions. Each network is further fine-tuned using triplet loss. The output features of the three networks are concatenated and trained with metric learning for dimension reduction to a vector of size 512. |

| Uniview Technology | Uses a fusion model of ResNet and GoogLeNet. |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | An improved SphereFace loss with a large-scale training dataset. |

| 4paradigm | We trained a ResNet-101 model with large additive margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned FaceScrub and MegaFace using the list of noisy face images released by [1]. [1] Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. 2018. |

| 4paradigm | We trained a ResNet-101 model with combined large margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned FaceScrub and MegaFace using the noise list provided by InsightFace at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab V2(Nanjing) | Compared to our submission named “Fiberhome AI Research Lab”, the differences are the following: 1. We changed the face alignment method. 2. We added private datasets for training. 3. We adopted a ResNet-50 network trained jointly with cosine loss and Additive Angular Margin Loss. |

| CyberLink | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| CyberLink_mobile | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html MobileFaceNets: Efficient CNNs for Accurate Real-Time Face Verification on Mobile Devices https://arxiv.org/abs/1804.07573v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| cyberlink_resnet-v2 | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Sogou AIGROUP - SFace | We collected and filtered millions of pictures from the Sogou picture search engine and mined one hundred thousand hard negative samples; all data are cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into patches to obtain features that represent local regions, especially for the tooth similarity model. We merged the features from the different models to produce the final result. |

| ICARE_FACE_V1 | We trained our model based on a deep convolutional neural network (ResNet-101) with Additive Margin Softmax. We semi-automatically cleaned the MS-Celeb-1M training dataset. In evaluation, we cleaned FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599 InsightFace clean list https://github.com/deepinsight/insightface |
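
Several of the descriptions above mention concatenating features from multiple networks and comparing faces by cosine distance. The following is a minimal sketch of how a rank-1 identification check against a set of distractors could look under those assumptions. The function names (`fuse`, `cosine_similarity`, `is_rank1_hit`) are hypothetical and do not come from any submission or from the benchmark's evaluation code.

```python
import math


def fuse(features):
    """Concatenate per-model feature vectors into one descriptor."""
    return [x for feature in features for x in feature]


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_rank1_hit(probe, mate, distractors):
    """True if the true mate scores strictly higher than every distractor."""
    mate_score = cosine_similarity(probe, mate)
    return all(cosine_similarity(probe, d) < mate_score for d in distractors)
```

The rank-1 identification rate would then be the fraction of probes for which `is_rank1_hit` is true; adding more distractors can only make a hit harder to achieve.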

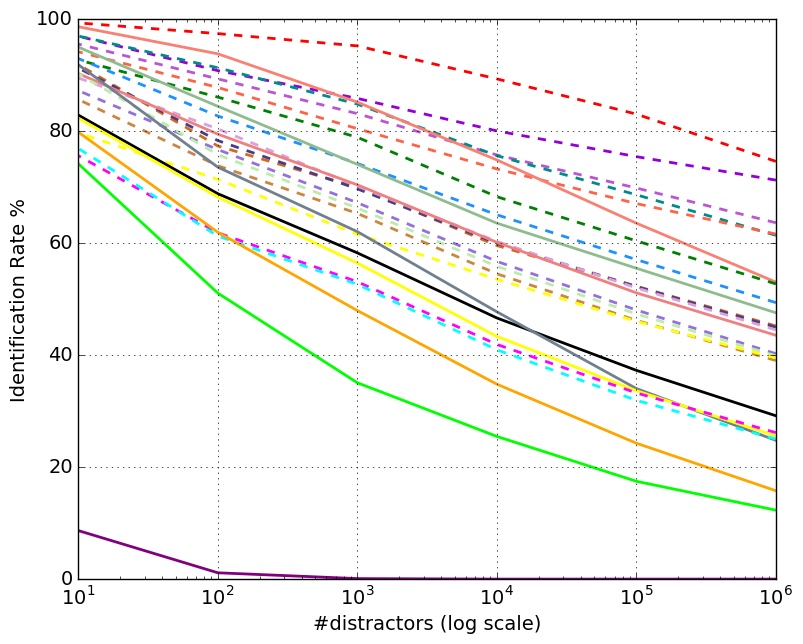

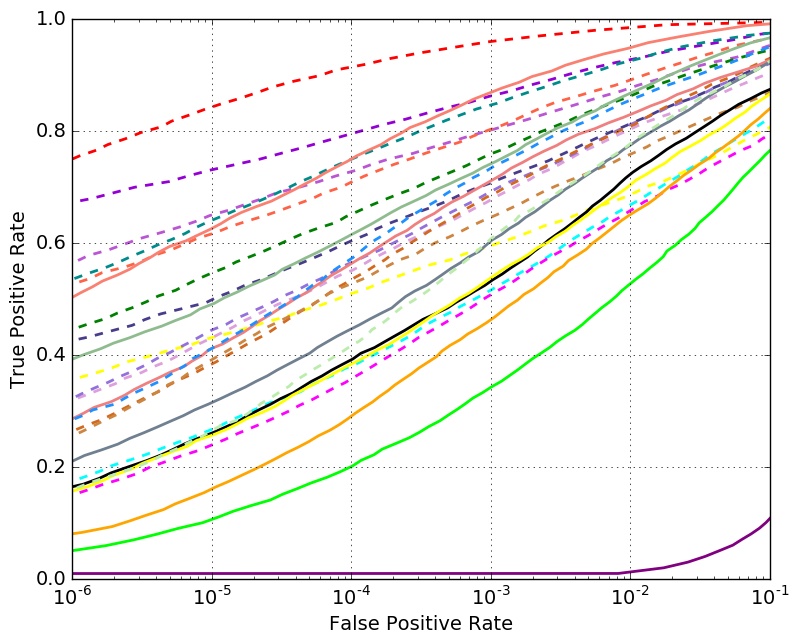

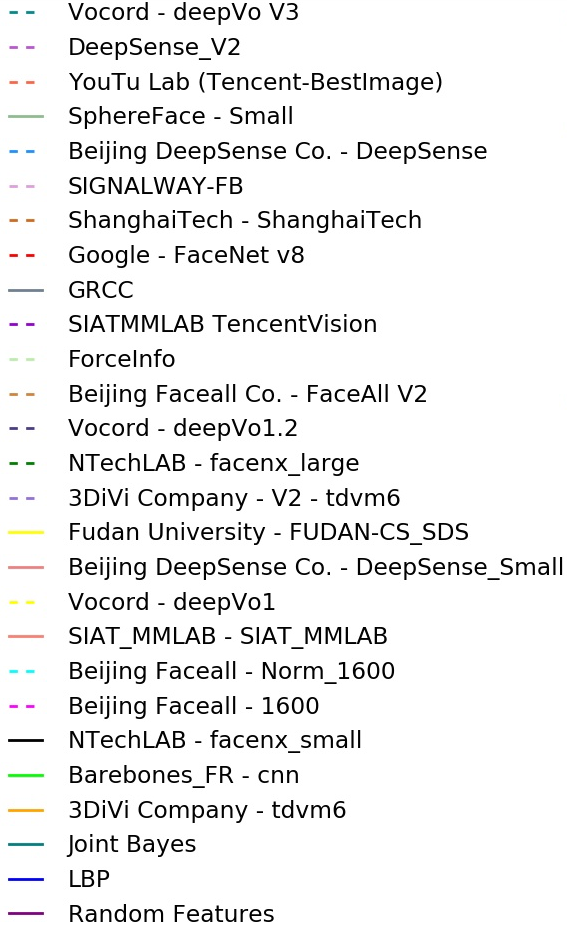

Identification Performance with 1 Million Distractors and with 10K Distractors (plots for Set 1, Set 2, and Set 3)

Legend: - - uses large training set

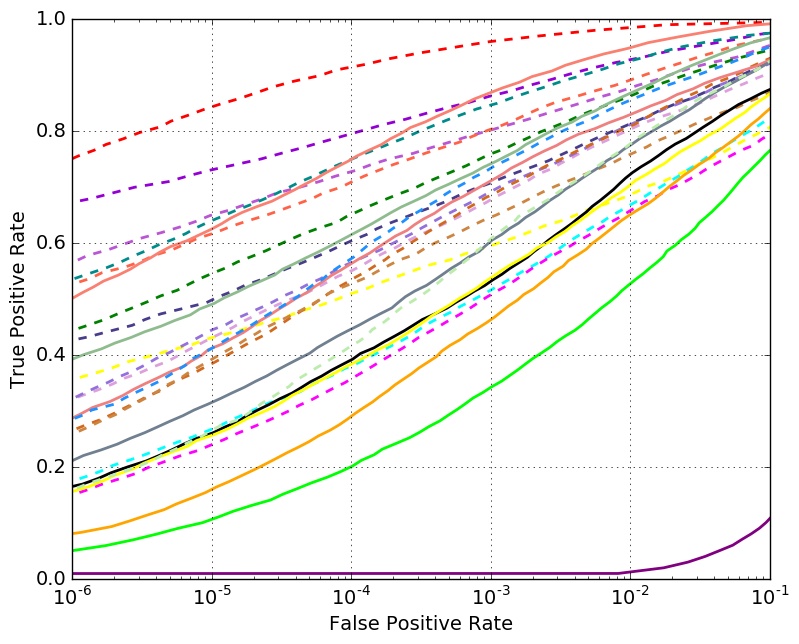

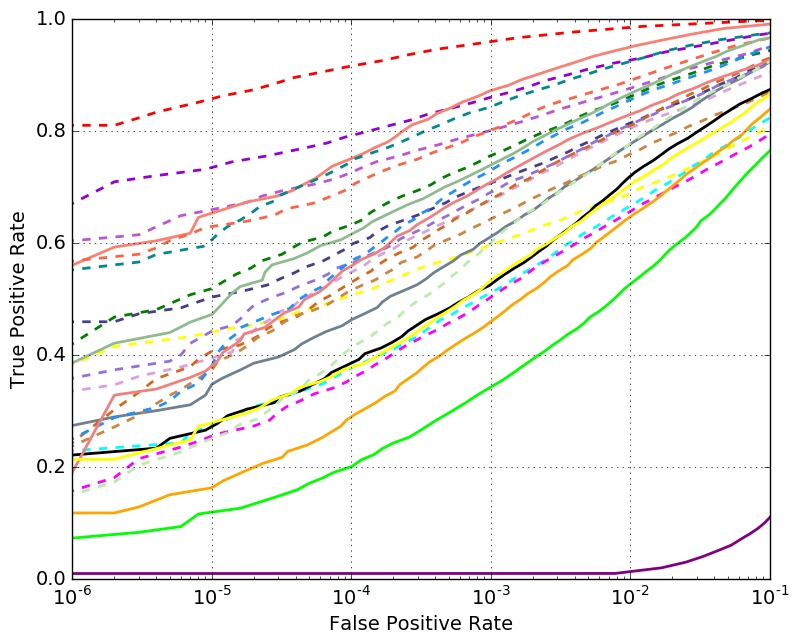

Verification

| Algorithm | Date Submitted | Set 1 | Set 2 | Set 3 | Data Set Size |

|---|---|---|---|---|---|

| Google - FaceNet v8 | 10/23/2015 | 75.55% | 75.55% | 75.55% | Large |

| EI Networks | 8/10/2018 | 70.119% | 70.119% | 70.119% | Large |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | 7/23/2018 | 69.957% | 69.957% | 69.957% | Large |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | 8/15/2018 | 69.0% | 69.0% | 69.0% | Large |

| THU CV-AI Lab | 12/12/2017 | 68.261% | 68.513% | 68.188% | Large |

| SIATMMLAB TencentVision | 12/1/2016 | 67.954% | 67.954% | 67.954% | Large |

| FaceTag V1 | 12/18/2017 | 65.789% | 65.789% | 65.789% | Large |

| TencentAILab_FaceCNN_v1 | 9/21/2017 | 64.886% | 64.724% | 64.724% | Large |

| ULUFace | 5/7/2018 | 64.6878% | 64.6878% | 64.6878% | Large |

| iBUG_DeepInsight | 2/8/2018 | 64.291% | 64.291% | 64.291% | Large |

| Yang Sun | 06/05/2017 | 63.623% | 64.688% | 65.247% | Large |

| ATLAB-FACEX (QINIU CLOUD) | 6/23/2018 | 61.29% | 61.29% | 61.29% | Large |

| BingMMLab V1(iBUG cleaned data) | 4/10/2018 | 59.78% | 59.78% | 59.78% | Large |

| BingMMLab-v1 (non-cleaned data) | 4/10/2018 | 59.78% | 59.78% | 59.78% | Large |

| DeepSense V2 | 1/22/2017 | 56.767% | 56.767% | 56.767% | Large |

| SenseTime PureFace(clean) | 6/13/2018 | 54.835% | 54.835% | 54.835% | Large |

| Fiberhome AI Research Lab V2(Nanjing) | 8/28/2018 | 54.529% | 54.529% | 54.529% | Large |

| YouTu Lab (Tencent Best-Image) | 04/08/2017 | 53.681% | 53.681% | 53.681% | Large |

| Vocord - deepVo V3 | 04/27/2017 | 53.573% | 53.573% | 54.637% | Large |

| CVTE V2 | 1/27/2018 | 53.338% | 53.338% | 53.338% | Small |

| SIAT_MMLAB | 3/29/2016 | 50.144% | 51.155% | 51.155% | Small |

| TUPUTECH | 12/22/2017 | 45.976% | 45.976% | 45.976% | Large |

| NTechLAB - facenx_large | 10/20/2015 | 45.381% | 45.507% | 44.37% | Large |

| iBug (Reported by Author) | 04/28/2017 | 44.947% | | | Small |

| Intellivision | 2/11/2018 | 43.793% | 44.894% | 43.793% | Large |

| Vocord-deepVo1.2 | 12/1/2016 | 43.252% | 43.252% | 43.252% | Large |

| SphereFace - Small | 12/1/2016 | 40.094% | 40.094% | 40.094% | Small |

| Video++ | 1/5/2018 | 35.709% | 34.645% | 34.645% | Large |

| Vocord - DeepVo1 | 08/03/2016 | 35.709% | 35.709% | 35.709% | Large |

| 3DiVi Company - tdvm V2 | 04/15/2017 | 33.075% | 33.075% | 33.075% | Large |

| CVTE | 08/30/2017 | 32.281% | 32.064% | 32.064% | Large |

| Faceter Lab | 12/18/2017 | 30.982% | 30.982% | 30.982% | Large |

| Progressor | 09/13/2017 | 29.61% | 29.61% | 29.61% | Large |

| DeepSense - Small | 07/31/2016 | 29.61% | 29.61% | 29.61% | Small |

| DeepSense - Large | 07/31/2016 | 29.177% | 29.177% | 29.177% | Large |

| Shanghai Tech | 08/13/2016 | 27.463% | 26.416% | 26.416% | Large |

| Beijing Faceall Co. - FaceAll V2 | 04/28/2017 | 26.54% | 26.54% | 26.54% | Small |

| GRCCV | 12/1/2016 | 22.068% | 22.068% | 22.068% | Small |

| Beijing Faceall Co. - FaceAll_Norm_1600 | 10/19/2015 | 18.243% | 18.243% | 18.243% | Large |

| NTechLAB - facenx_small | 10/20/2015 | 16.961% | 16.961% | 16.961% | Small |

| Fudan University - FUDAN-CS_SDS | 1/29/2017 | 16.528% | 16.528% | 16.528% | Small |

| ForceInfo | 04/07/2017 | 16.059% | 16.059% | 16.059% | Large |

| Beijing Faceall Co. - FaceAll_1600 | 10/19/2015 | 15.843% | 15.843% | 15.843% | Large |

| XT-tech V2 | 08/30/2017 | 13.587% | 13.587% | 13.587% | Large |

| 3DiVi Company - tdvm6 | 10/27/2015 | 8.336% | 8.336% | 8.336% | Small |

| Barebones_FR - cnn | 10/21/2015 | 4.764% | 5.792% | 5.792% | Small |

Method Details

| Algorithm | Details |

|---|---|

| Orion Star Technology (clean) | We have trained three deep networks (ResNet-101, ResNet-152, ResNet-200) with joint softmax and triplet loss on MS-Celeb-1M (95K identities, 5.1M images), and the triplet part is trained by batch online hard negative mining with subspace learning. The features of all networks are concatenated to produce the final feature, whose dimension is set to be 256x3. For data processing, we use original large images and follow our own system by detection and alignment. Particularly, in evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. |

| Orion Star Technology (no clean) | Compared to our other submission named “Orion Star Technology (clean)”, the major difference is that no data cleaning is adopted in evaluation. |

| SuningUS_AILab | This is an ensemble of three different models using ResNet and improved ResNet networks, learned with a combined loss. A filtered MS-Celeb-1M and CASIA-WebFace are used as the dataset. |

| ULSee - Face Team | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 Large-Margin Softmax Loss for Convolutional Neural Networks https://arxiv.org/abs/1612.02295 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf NormFace: L2 Hypersphere Embedding for Face Verification https://arxiv.org/abs/1704.06369 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| SIATMMLAB TencentVision | Adopts an ensemble of very deep CNNs, learned by joint supervision (softmax loss, improved center loss, etc.). Training data is a combination of public datasets (CASIA, VGG, CACD2000, etc.) and private datasets. The total number of images is more than 2 million. |

| DeepSense - Small | Adopts a network of very deep ResNet CNNs, learned by combined supervision (identification loss (softmax loss), verification loss, triplet loss). |

| GRCCV | The algorithm consists of three parts: an FCN-based fast face detection algorithm, pre-training a ResNet CNN on a classification task, and weight tuning. The training set contains 273,000 photos. Hardware: 8 x Tesla K80. |

| SphereFace - Small | SphereFace uses a novel approach to learn face features that are discriminative on a hypersphere manifold. The training data set we use in SphereFace is the publicly available CASIA-WebFace dataset which contains 490k images of nearly 10,500 individuals. |

| EM-DATA | arcface, https://github.com/deepinsight/insightface |

| StartDT-AI | We use a single ResNet-28 model trained jointly with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: DeepVisage: Making face recognition simple yet with powerful generalization skills https://arxiv.org/abs/1703.08388 One-shot Face Recognition by Promoting Underrepresented Classes https://arxiv.org/abs/1707.05574v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 |

| BingMMLab-v1 (non-cleaned data) | Compared to our submission named “BingMMLab V1(cleaned data)”, the only difference is that no data cleaning is adopted in this evaluation. |

| BingMMLab V1(iBUG cleaned data) | We used a knowledge graph to collect identities and then crawled the Bing search engine to get high-quality images. We filtered noise in the 14M training data by clustering with weak face models, and then trained a DenseNet-69 (k=48) network with A-Softmax loss variants. In evaluation, we used the cleaned test set released by iBUG_DeepInsight. DenseNet https://arxiv.org/pdf/1608.06993v2.pdf A-Softmax and its variant: https://arxiv.org/pdf/1704.08063.pdf https://github.com/wy1iu/LargeMargin_Softmax_Loss/issues/13 |

| MTDP_ITC(Clean) | Angular Softmax Loss with Channel-wise Attention |

| Kankan AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| TUPUTECH V1 (iBUG cleaned data) | We have trained ResNet models with a combined loss on MS-Celeb-1M. In evaluation, we have cleaned the FaceScrub and MegaFace with the code released by iBUG_DeepInsight. [iBUG_DeepInsight code](https://github.com/deepinsight/insightface) |

| TUPUTECH v2 | Compared to our submission named “TUPUTECH v1 (clean)”, the only difference is that data cleaning by TUPU is adopted in evaluation. |

| Uface | Trained a network with ArcFace loss and tried several data preprocessing methods. In evaluation, we used the cleaned test set released by iBUG_DeepInsight. |

| ULUFace | Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 MTCNN:Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 FaceNet: A Unified Embedding for Face Recognition and Clustering https://arxiv.org/abs/1503.03832 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| Jian24 Vision | We use a single ResNet-101 model trained jointly with cosine loss and triplet loss on MS-Celeb-1M (74K identities, 4M images). References: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 A Discriminative Deep Feature Learning Approach for Face Recognition https://ydwen.github.io/papers/WenECCV16.pdf |

| sophon | Uses InsightFace with a modified loss. |

| MSU_Intsys | Custom version of ArcFace. |

| FeelingTech | FeelingFace, trained on a cleaned MS-Celeb-1M dataset. |

| KANKAN AI Lab | We have trained our model on ResNet-152 with Additive Angular Margin Loss on combined dataset with MS-Celeb-1M and VggFace2, and cleaned the FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface VGGFace2: A dataset for recognising faces across pose and age https://arxiv.org/abs/1710.08092 MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition https://www.microsoft.com/en-us/research/wp-content/uploads/2016/08/MSCeleb-1M-a.pdf |

| Sogou | We collected and filtered millions of pictures from the Sogou picture search engine, and also used picture data cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into patches to obtain features that represent local regions such as the mouth and even the teeth. We merged the features from the different models to produce the final result. |

| SenseTime PureFace(clean) | We trained a deep residual attention network (Attention-56) with A-Softmax to learn the face features. The training dataset was constructed with a novel dataset-building technique, which is critical to the model's performance. The results are reported on the cleaned test set released by iBUG_DeepInsight. Wang F, Jiang M, Qian C, et al. Residual attention network for image classification[J]. arXiv preprint arXiv:1704.06904, 2017. Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. arXiv preprint arXiv:1801.07698, 2018. Liu W, Wen Y, Yu Z, et al. Sphereface: Deep hypersphere embedding for face recognition[C]//The IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017, 1. |

| ATLAB-FACEX (QINIU CLOUD) | A single deep Resnet model (the 'r100' configuration from insightface) trained with our own angular margin loss (an improved variant of A-Softmax, not published yet). The training set consists of nearly 6.2M images (about 96K identities). For MegaFace evaluation, we adopted the clean list released by iBUG_DeepInsight. insightface: https://github.com/deepinsight/insightface A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Qsdream | ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 |

| PingAn AI Lab (Nanjing) | This is a single deep ResNet model trained on MS-Celeb-1M with a cosine loss. References: ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 We used the noise list provided by InsightFace at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab(Nanjing) | mtcnn: Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://arxiv.org/abs/1604.02878 https://github.com/pangyupo/mxnet_mtcnn_face_detection ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698 https://github.com/deepinsight/insightface |

| QINIU ATLAB - FaceX V1 (iBUG cleaned data) | This feature model is an ensemble of 3 deep Resnet models (the 'r100' and 'r152' configuration from insightface[1] ) trained with our own angular margin loss (an improved variant of A-Softmax[2] , not published yet). The training set consists of 7 Million images (about 188K identities). For evaluation, we adopted the clean list released by iBUG_DeepInsight[1] . Reference: [1] insightface: https://github.com/deepinsight/insightface [2] A-Softmax: https://arxiv.org/pdf/1704.08063.pdf |

| Visual Computing-Alibaba-V1(clean) | A single model (improved ResNet-152) is trained under the supervision of combined loss functions (A-Softmax loss, center loss, triplet loss, etc.) on MS-Celeb-1M (84K identities, 5.2M images). In evaluation, we use the cleaned FaceScrub and MegaFace released by iBUG_DeepInsight. |

| Beijing Faceall Co. & BUPT(iBug cleaned) | We trained on the Faceall-msra celebrities dataset, which contains over 4.4 million photos. We use an ensemble of 6 models with cosine distance as the distance metric. For the loss function, we adopt A-Softmax and Additive Angular Margin Loss during training. We evaluate our results on the iBUG-cleaned versions of the MegaFace and FaceScrub lists. Method references: SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v2 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 |

| EI Networks | We built a training database of 120,000 identities and 12 million images from a combination of public and private databases. We removed the overlap with the FaceScrub and FGNet databases from our training set. Three deep residual networks (one ResNet-150-like and two ResNet-100-like) are trained on 112x96 input images with multiple large-margin loss functions. Each network is further fine-tuned using triplet loss. The output features of the three networks are concatenated and trained with metric learning for dimension reduction to a vector of size 512. |

| Uniview Technology | Uses a fusion model of ResNet and GoogLeNet. |

| SRC-Beijing-FR(Samsung Research Institute China-Beijing) | An improved SphereFace loss with a large-scale training dataset. |

| 4paradigm | We trained a ResNet-101 model with large additive margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned FaceScrub and MegaFace using the list of noisy face images released by [1]. [1] Deng J, Guo J, Zafeiriou S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition[J]. 2018. |

| 4paradigm | We trained a ResNet-101 model with combined large margin softmax loss on merged MS-Celeb-1M and Asian-Celeb, and fine-tuned the model with batch-hard triplet loss. In evaluation, we cleaned FaceScrub and MegaFace using the noise list provided by InsightFace at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Fiberhome AI Research Lab V2(Nanjing) | Compared to our submission named “Fiberhome AI Research Lab”, the differences are the following: 1. We changed the face alignment method. 2. We added private datasets for training. 3. We adopted a ResNet-50 network trained jointly with cosine loss and Additive Angular Margin Loss. |

| CyberLink | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| CyberLink_mobile | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html MobileFaceNets: Efficient CNNs for Accurate Real-Time Face Verification on Mobile Devices https://arxiv.org/abs/1804.07573v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| cyberlink_resnet-v2 | Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks https://kpzhang93.github.io/MTCNN_face_detection_alignment/index.html Deep Residual Learning for Image Recognition https://arxiv.org/abs/1512.03385v1 ArcFace: Additive Angular Margin Loss for Deep Face Recognition https://arxiv.org/abs/1801.07698v1 SphereFace: Deep Hypersphere Embedding for Face Recognition https://arxiv.org/abs/1704.08063v4 Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599v4 We used the noises list proposed by InsightFace, at https://github.com/deepinsight/insightface/tree/master/src/megaface |

| Sogou AIGROUP - SFace | We collected and filtered millions of pictures from the Sogou picture search engine and mined one hundred thousand hard negative samples; all data are cleaned by DeepInsight. We trained multiple models with different loss functions, such as combined margin loss and margin loss with focal loss. In some models we divided faces into patches to obtain features that represent local regions, especially for the tooth similarity model. We merged the features from the different models to produce the final result. |

| ICARE_FACE_V1 | We trained our model based on a deep convolutional neural network (ResNet-101) with Additive Margin Softmax. We semi-automatically cleaned the MS-Celeb-1M training dataset. In evaluation, we cleaned FaceScrub and MegaFace with the lists released by iBUG_DeepInsight. Additive Margin Softmax for Face Verification https://arxiv.org/abs/1801.05599 InsightFace clean list https://github.com/deepinsight/insightface |
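
Many entries above cite the Additive Angular Margin Loss (ArcFace). As a rough illustration of the idea only, the sketch below computes the margin-adjusted logits for one sample: the embedding and class weights are L2-normalized, the angle to the target class is increased by a margin, and all cosines are multiplied by a scale. The function name `arcface_logits` and the default `scale` and `margin` values are assumptions chosen for illustration, not settings reported by any submission. The sketch also omits the special handling that practical implementations apply when the angle plus margin exceeds π.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def arcface_logits(embedding, class_weights, target, scale=64.0, margin=0.5):
    """Return scaled cosine logits with an angular margin on the target class.

    scale and margin are illustrative defaults (assumption).
    """
    unit_embedding = l2_normalize(embedding)
    logits = []
    for class_index, weight in enumerate(class_weights):
        unit_weight = l2_normalize(weight)
        cos_theta = sum(a * b for a, b in zip(unit_embedding, unit_weight))
        # Clamp to guard against rounding slightly outside [-1, 1].
        cos_theta = max(-1.0, min(1.0, cos_theta))
        if class_index == target:
            # Add the margin to the angle of the ground-truth class only.
            cos_theta = math.cos(math.acos(cos_theta) + margin)
        logits.append(scale * cos_theta)
    return logits
```

These logits would then be passed to a standard softmax cross-entropy loss; the margin lowers the target logit during training, which pushes the network toward tighter angular clusters per identity.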

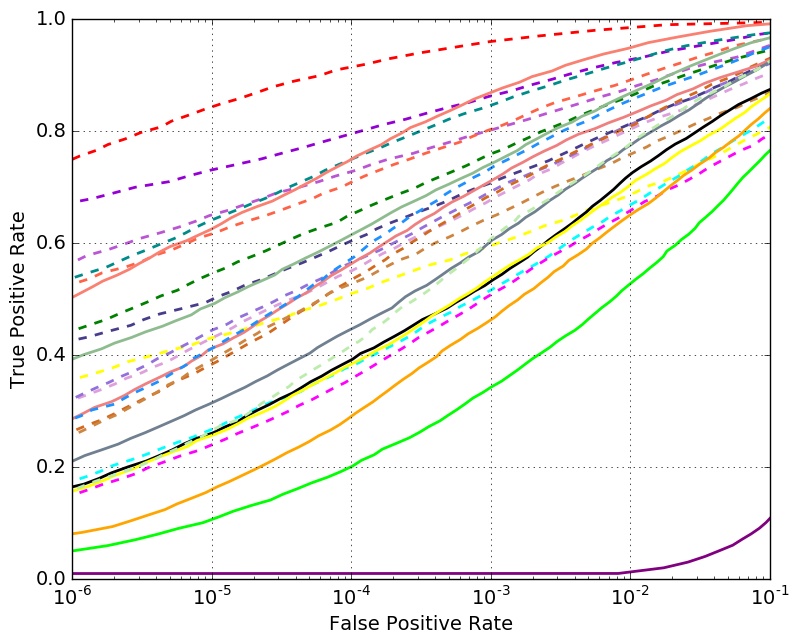

Verification Performance with 1 Million Distractors and with 10K Distractors (plots for Set 1, Set 2, and Set 3)

Legend: - - uses large training set

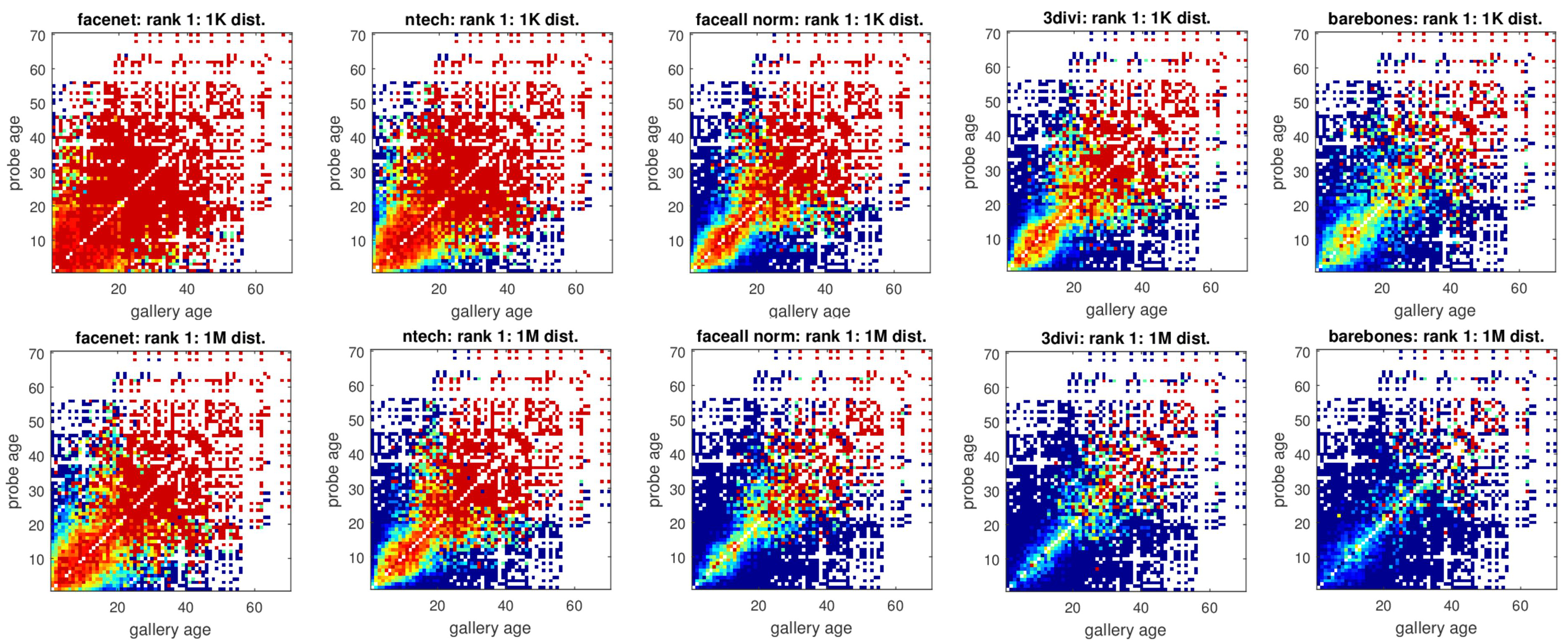

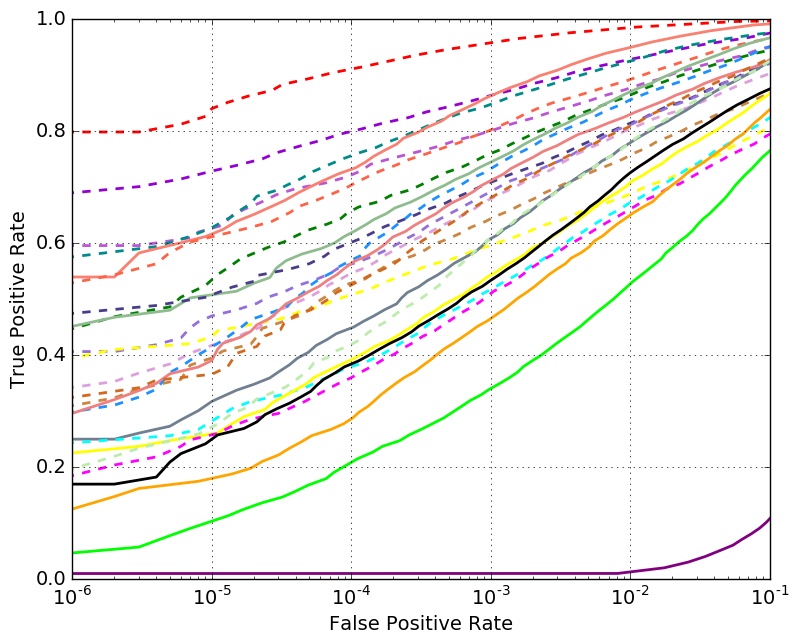

Analysis of Rank-1 Identification for Varying Ages

The colors represent identification accuracy for each combination of probe age and gallery age, ranging from 0 (blue), where none of the true pairs were matched, to 1 (red), where all possible combinations of probe and gallery were matched.
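
As a minimal sketch of how such a per-age accuracy grid could be tabulated, the function below groups true probe/gallery pairs by their ages and computes the fraction matched at rank 1 in each cell. The input format (tuples of probe age, gallery age, and a match flag) and the name `age_accuracy_grid` are assumptions for illustration; the benchmark's actual plotting code is not shown in this document.

```python
from collections import defaultdict


def age_accuracy_grid(pairs):
    """Map (probe_age, gallery_age) to the fraction of true pairs matched.

    pairs: iterable of (probe_age, gallery_age, matched) tuples, where
    matched is truthy if the true pair was retrieved at rank 1.
    Cells with no pairs are simply absent from the result.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for probe_age, gallery_age, matched in pairs:
        cell = (probe_age, gallery_age)
        totals[cell] += 1
        if matched:
            hits[cell] += 1
    return {cell: hits[cell] / totals[cell] for cell in totals}
```

A value of 0.0 in a cell corresponds to the blue end of the color scale and 1.0 to the red end.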